One icy morning in February, nearly 200 people gathered in a church in downtown Richmond, Va. Most had awakened before dawn and driven in from across the state. There were Republicans and Democrats from rural farms and D.C. exurbs. They shared one goal: to fight back against AI development in a region with the largest concentration of data centers in the world. “Aren’t you tired of being ignored by both parties, and having your quality of life and your environment absolutely destroyed by corporate greed?” state senator Danica Roem said, to a standing ovation.

The activists—wearing homemade shirts with slogans like Boondoggle: Data Center in Botetourt County—marched to the state capitol and spent the day testifying to lawmakers about their fears over data centers’ impacts on electricity, water, noise pollution, and more. Some lawmakers pledged to help: “You’re getting a sh-t deal,” state delegate John McAuliff told activists.

The phrase captured many people’s feelings toward the AI industry as a whole. Not much unites Americans these days. But a growing cross section of the public—from MAGA loyalists to Democratic socialists, pastors to policymakers, nurses to filmmakers—agree on at least one thing: AI is moving too fast. While most Americans use the tools, the U.S. is one of the most AI-pessimistic countries in the world. A 2025 Pew poll found five times as many Americans are concerned as are excited about the increased use of AI in daily life. The public thinks AI will worsen our ability to think creatively, form meaningful relationships, and make difficult decisions, Pew found. Other surveys show Americans believe AI will spread misinformation, erode our sense of purpose and meaning, and harm our social and emotional intelligence.

Industry boosters argue the U.S. is in a race with China for technological supremacy, and thus the sprint has existential stakes. But many Americans view AI through the lens of issues much closer to home: skyrocketing electricity bills, looming job displacement, teenage chatbot addiction. Last October, after 134,000 people signed a statement calling for a halt to the development of superintelligence, “I was thinking, why are we getting military people, faith leaders, and everyone signing?” says Max Tegmark, a physicist whose nonprofit organization, The Future of Life Institute, issued the statement. “And then it hit me: they’re all rooting for Team Human instead of Team Machine.”

It’s easy to feel like Team Machine is winning. Big Tech companies are putting out increasingly powerful AI models and amassing billions of dollars in investments. (TIME has a licensing and technology agreement that allows OpenAI to access TIME’s archives.) The companies are being cheered on by the Trump Administration, which sees AI as key to geopolitical dominance.

Tech leaders, of course, say that they are on Team Human: that society’s productivity, happiness, and even health will be improved by ultrasmart, benevolent digital assistants. But many people don’t believe them—especially as some of these companies embrace tactics to juice revenue and usership, like erotica, deepfake generation, and in-chatbot ads. So AI’s critics are taking matters into their own hands. In the hopes of slowing the runaway hype train, they are staging rallies and packing town halls, delivering sermons, writing contract protections, filing lawsuits, and running for office. As physical manifestations of the industry’s heedlessness, the data centers powering AI systems have become the focus of protests. From Virginia to Indiana to Arizona, activists stalled $98 billion in data-center projects in the second quarter of 2025 alone, researchers at Data Center Watch found. “Every day I hear from someone with a different reason for fighting a data center,” says Saul Levin, a D.C.-based organizer.

AI companies have responded by implementing some guardrails, including around age verification, electricity costs, and sexualized imagery. But skepticism abounds. “I’m not sure we can trust these guys as an industry overall to make changes without further pressure,” says Maria Raine, who, along with her husband Matthew, sued OpenAI after their son Adam died by suicide after seeking guidance from ChatGPT. (OpenAI argued in legal filings that Raine misused the chatbot.)

The fight over AI could be a pivotal factor in this year’s midterm elections. Silicon Valley leaders say they plan to spend hundreds of millions of dollars to elect pro-AI candidates. But strategists on the left and right alike warn that a backlash is coming. “Politicians who choose to do the bidding of Big Tech at the expense of hardworking Americans will pay a huge political price,” says Brendan Steinhauser, a GOP strategist and the CEO of the Alliance for Secure AI.

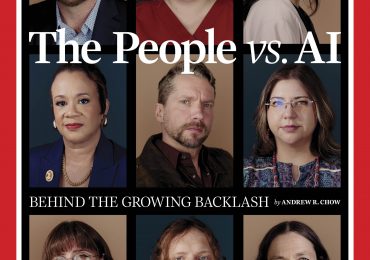

In what amounts to a survey of the battlefield, TIME spoke to a broad array of AI skeptics. What follows are their stories—nine Americans, from disparate regions, ideologies, and professions, but bound by a shared mission: to stop, or at least slow, a technology infiltrating almost every aspect of our lives.

—With reporting by Harry Booth

Francesca Hong

Gubernatorial candidate

For the past five months, Wisconsin state representative Francesca Hong has been crisscrossing the state in her run for governor. One of the biggest issues she keeps hearing about on the campaign trail? Data centers. “Folks are asking us: ‘What is your plan? How are you going to hold local government and these corporations accountable?’” she says.

Hong is among a growing contingent of 2026 candidates who are making data-center restrictions a key campaign promise. What used to be a niche issue is now a rallying cry, because so many voters are “concerned and pissed off” about the projects, she says. “They want Wisconsin to be a hostile environment to the construction of AI data centers.”

Hong, 37, doesn’t come from a tech background. Born and raised in Madison by immigrant parents, she has worked as a bartender, a line cook, a baker, and the chef and co-owner of the (now closed) Madison restaurant Morris Ramen. Over the course of the COVID pandemic, Hong became a leader in the local restaurant industry, fighting for emergency unemployment benefits. The experience spurred her to run for a seat in the state assembly, where she has now served for five years.

A democratic socialist, Hong has prioritized the issue of affordability for working-class people. The proliferation of data centers has become central to that conversation. She criticizes the tax breaks that many data centers receive to set up shop; the NDAs signed by officials that keep citizens in the dark on many details; and the failed promises of previous projects. In Mount Pleasant, Wis., the TV-manufacturing factory run by tech company Foxconn promised to create 13,000 jobs and required more than $1 billion in public spending before sputtering in value and being converted into a data center.

With that debacle fresh in mind, campaigns to defeat proposed data centers have cropped up across the state, successfully halting projects in Caledonia and Menominee. Hong co-drafted a statewide data-center moratorium bill in February. “We’re focused on strong local economies where there are long-term gains and positive impact,” she says, “and we’re not seeing that from places across the country that have constructed these AI data centers.” Hong says her stance reflects the position of the state. “It’s important to see this as a democracy issue,” she says. “Folks are exercising their right to have their voices heard.”

Michael Grayston

Pastor

Last May, Pope Leo XIV called out AI in his first address to the College of Cardinals. Its rise, he told the Catholic leaders, required the “defense of human dignity, justice, and labor.” Many faith leaders from other denominations have since followed Leo’s lead, using their pulpits to warn of AI’s potential harms. That includes Michael Grayston, a pastor at LifeFamily church in Austin. “I am deeply concerned that AI can erode an individual’s sense of spirituality or connection to God,” he says.

Since November, Grayston, 44, has held forum discussions about AI at his church, which he hopes will raise awareness about harms, encourage congregants to advocate for guardrails, and foster personal bonds among one another.

Grayston does not believe AI is unequivocally bad: it could bring efficiencies and benefits to workers, he says. But he is much more concerned by the emotional and social cost of AI chatbots, particularly for vulnerable teenagers.

A July 2025 study from Common Sense Media found that half of 13- to 17-year-old respondents talked to AI companions at least a few times a month. Many now consult with bots not only for homework advice, but also for deep moral or philosophical questions that were previously directed at friends, family, or faith leaders. Grayston understands why. “If my teen were to ask me a question that would prompt a concern, I would dig deeper to try to find the source of the problem, which can be uncomfortable,” he says. “So why would they come talk to me?”

But an obsession with chatbots among teens has also been linked to tragedies, like the suicide of Sewell Setzer, a 14-year-old from Florida. Grayston sees the technology taking society down a dark path. “With social media, we are more connected than ever, but the relationships are becoming so shallow, which has led to this loneliness epidemic,” he says. “AI has just poured gasoline on that, and pushes us even more into isolation.”

Community is one of the key teachings of Scripture, Grayston says. “You will find some powerhouses in the Bible that were single. But what did Jesus, Paul, and others have in common? They had close bonds with those around them,” he says. “It’s not good for man to be alone.”

Alicia Johnson

Member of the Georgia Public Service Commission

The race for Georgia’s Public Service Commission (PSC), which regulates utilities, is typically a sleepy affair. Before last year, no Democrat had served on the board since 2006.

But in November’s elections, two Democrats—Alicia Johnson and Peter Hubbard—pulled off upsets to win spots on the commission. Georgians were fed up with rising electricity prices and the explosion in data centers across the state—and many thought those two factors were related.

Johnson, 52, who previously worked in health management and community development, campaigned partly on reining in the data-center build-out and forcing the projects to pay their own way. “Residential customers and small businesses are now bearing the cost for reliability and risk created by these massive server farms with companies who are already making billions of dollars,” Johnson says. “I don’t know about you, but I don’t want to pay for some billionaire’s server farm.”

Johnson’s victory reflects Georgia’s status as one of the nation’s most heated data-center battlegrounds. The pro-business state has handed out tax breaks and low electricity rates to dozens of data-center projects. The Atlanta metro area is the second largest data-center hub in the world behind Northern Virginia.

Studies have shown that residents in counties with data centers are facing higher power bills, in part because the cost of upgrades is being passed on to ratepayers. In Georgia, the PSC approved six rate hikes from 2023 to 2025.

AI companies are aware of the problem. Since January, Microsoft, Anthropic, and OpenAI have pledged to pay more for electricity costs, moves applauded by President Trump.

But Johnson, the first Black woman to serve on the PSC, hopes the commission and lawmakers will go further, and push data centers to commit to using clean energy and contribute to the state’s grid stability by curtailing their usage during peak demand.

“Data centers are a real problem that we have got to solve quickly. The noise pollution and other harms are happening in real time,” she says. “The more people know about that, the more they will be engaged in the process.”

Justine Bateman

Filmmaker

In November, Amazon used an AI tool to dub English voice tracks for multiple Japanese anime series on Prime Video. The move was met with anger and ridicule. Fans called the dubs “soulless” and “embarrassing,” and the company quietly removed them days later.

For filmmaker and actor Justine Bateman, the incident was an example of how tech companies are increasingly using AI for storytelling—and making it worse in the process. “A lot of streaming companies have executives with no background in film who are very focused on generating volume. And AI is really good at doing that faster and cheaper than humans do,” she says. “But it will not automate anything exceptional. It’ll regurgitate the past, and spit out a Frankenstein spoonful of whatever you put into the blender.”

For the past several years, Bateman, 60, has been one of the leaders of an anti-AI resistance in Hollywood. She has a computer science degree and served as an adviser to the Screen Actors Guild during their 2023 contract negotiations, helping secure protections including a requirement for producers to get actors’ consent to use their likenesses for AI. She now runs Credo 23, a film festival in Los Angeles that showcases new movies produced without AI.

Last year, Credo 23 gave out more than $70,000 in grants to filmmakers. “We’re looking for filmmakers who are pushing the creative ball down the field, even as the field is being sold out from under our feet,” she says. “Myself and others are just carving a tunnel through, because the art of filmmaking is going to survive this, even if the business doesn’t.”

Plenty in Hollywood have taken the opposite tack. Disney took a $1 billion stake in OpenAI and allowed its characters to be used in Sora, the AI company’s short-form video-generation platform. Actors like Matthew McConaughey and Michael Caine have signed deals with AI voice generation platforms. Bateman understands why industry incentives are pushing studios toward AI: “If they’re just looking for stuff that is second screen and plays in the background, then why not? I mean, you have almost a fiduciary responsibility to utilize it, right?” she says.

But Bateman believes that soon enough, both creators and audiences will get sick of AI slop and demand a new way forward. “I think the audience wants something real. And if you’re a filmmaker who decides to use generative AI, you’re stopping your forward progress,” she says. “You’re giving up.”

John Palowitch

Researcher and tech organizer

The research scientist John Palowitch joined Google in 2017, hoping to use his technical expertise to improve the company’s search engine. But as the AI arms race escalated in 2024, his entire team was shifted over to work on Gemini, Google’s AI model. It became clear to Palowitch that Gemini was now central to Google’s mission. “Google leadership directives to us were all about ‘how can we encourage people to use Gemini for more and more things?’ From deciding what to cook for dinner, to what iPhone you want to buy, to what the news is saying,” Palowitch, 35, says.

The work of Palowitch—and more than 3,000 other Google colleagues—vastly improved Gemini’s capabilities, cementing the company at the frontier of the nascent technology. But the more Palowitch saw Google plow its resources into AI, the more disillusioned he became. While he loved how the company’s search engine helped connect humans with one another, in his view Gemini seemed to do the opposite: prevent people from exploring the fullness of the web. Palowitch also worried about users’ increasing dependence on the tool, which struck him as the point. “Much of the time it does give you the answer that you wanted,” he says. “But down the line, if we become too attached to these tools, there’s no way to break away from these large monopolies that host them.”

Palowitch was also aggrieved by Google’s growing role in warfare. In 2021, Google and Amazon signed a $1.2 billion contract to provide tech services to the Israeli government. As the war in Gaza intensified, the worker-led coalition No Tech for Apartheid led a sit-in of Google employees in 2024.

Palowitch signed up to be an organizer for the group. “I was working on something that I thought was good for the world, which is access to information,” he says. “I gradually became aware that this was dual-use technology, and that it could be used for surveillance, state violence, and genocide.” (Google says the contract was not for “military workloads.”)

Last September, Palowitch left Google, and he has since joined the nonprofit Internet Archive, making him one of a growing number of tech workers to defect from their companies and then go public with their concerns. He has continued his organizing work, connecting ex-colleagues with No Tech for Apartheid in order to build “worker power” at Google, he says. This February, he helped develop a campaign in which more than 1,200 employees protested the company’s ICE contracts.

Jordan Harmon & Mackenzie Roberts

Activists

In 2021, the Muscogee Nation bought a 5,500-acre ranch in Okmulgee County, Oklahoma. Officials emphasized the land would be used for “food security,” agriculture, hunting, and fishing. A cattle ranch set up shop, selling high-quality local beef.

So it came as a surprise to Jordan Harmon, a Muscogee Nation citizen, when she learned about a proposal last year to rezone the entire parcel as a technology park in order to build a hyperscale data center. Harmon, a policy specialist at the Indigenous Environmental Network, objected to the proposal both for its potential strain on local resources and what the project stood for. Representatives for the data center had not said how they would mitigate potential environmental harms or upgrade water infrastructure in an area already plagued by boil orders and service issues.

Harmon, 33, also saw the AI industry’s ravenous consumption of data and physical resources as an extension of a much larger, tragic pattern in American history. “They need these data centers for their programs like AI facial recognition to surveil and police people, including Indigenous people, who are also being detained by ICE,” she says. “This is all a part of the legacy of colonialism and imperialism.”

Harmon and her friend Mackenzie Roberts, a 27-year-old intake specialist at the Muscogee Nation Center for Victim Services, staged a series of town halls across Muskogee County. They drew crowds at colleges, community centers, and even a butterfly farm. At each stop, citizens, even typically apathetic ones, got fired up about protesting the proposal, Roberts says. Amid the outcry, the proposal was ultimately shot down in November by the tribe’s National Council. One of its representatives, Dode Barnett, watched livestreams of Harmon and Roberts’ sessions and voted no based on what she saw as the project’s lack of transparency, its attempted takeover of agricultural land, and the vociferous feedback she received from community members. “The amount of money that they’re saying that we could make is a large amount of money. But it’s also going to leave our land scarred, and we can’t ever get that back,” Barnett says. “This feels like a modern-day land run.”

Joe Allen

Writer and public speaker

Long before Joe Allen became Steve Bannon’s right-hand man on his anti-AI crusade, he was a rigger for concert artists like the Black Eyed Peas and Rascal Flatts. At sold-out arenas across the world, Allen would walk steel girders 100 ft. in the air to suspend lighting and audio systems. Watching shows from the rafters, he became disillusioned by what he perceived as the mind-numbing mass spectacle below. “My job was to put together and maintain a machine that was specifically designed to hold people in thrall—to make people less intelligent and less conscious,” he says.

Allen, 46, thinks similarly about AI. For five years he served as the “transhumanism editor” on Steve Bannon’s War Room, a political show led by President Trump’s former chief strategist. Some of the show’s most popular segments, Bannon has said, are Allen’s diatribes against Big Tech.

A recent poll from the Institute for Family Studies found that 78% of Trump voters want tech companies held liable for child harms. “From the standpoint of populists, this is all a horrific imposition,” Allen says. “It would be as if everyone was told that the future required them to snort OxyContin off of a toilet bowl.”

Last June, Allen and Bannon lobbied against the industry’s attempt to pass a 10-year federal moratorium on state regulation of AI, putting in calls to Republican Senators like Marsha Blackburn—who pulled her support from the bill at the 11th hour, causing it to fall apart. (Trump issued an Executive Order in December to challenge state AI laws.)

“Trump’s approach to artificial intelligence may be the most important facet of his career as President,” Allen says. “And right now, the Administration is defending predatory corporations and executives against the will of the people.”

In January, Allen left his position on the War Room. He now talks about issues with AI in speeches across the country and is helping launch a new AI-focused organization, Humans First. He knows AI regulation won’t cure America’s deep divides. “Should we manage to beat this back, we’ll still be stuck with each other,” he says. “But it’ll be one less character in the story.”

Hannah Drummond

Nurse

When Hurricane Helene barreled into North Carolina in 2024, Hannah Drummond, a registered nurse, worked through the disaster along with her colleagues at Mission Hospital in Asheville, tending to victims of the deadly storm. Conditions were bleak: The hospital lost running water and electricity, rendering computers useless and forcing workers to urinate in buckets. ER nurses cared for 10 patients at a time.

For Drummond, 35, the harrowing experience reinforced two beliefs: that hospital systems severely undersupport their workers, and that AI will never be able to replicate their work. “So much of what we do as nurses is in the intangibles: Is there a slight change in the pallor of your skin? Do you have fruity breath?” she says. “We don’t know any technology that can imitate that whatsoever.”

When she’s not caring for patients, Drummond has become an industry leader pushing back against the rapid rollout of AI in health care. A member of the National Nurses Organizing Committee, she helped nurses at 17 facilities in the HCA hospital system, including her own, win AI protections in their most recent contract, including a provision requiring hospitals to give RNs a say in how new technologies related to patient care are implemented. She’s also on North Carolina’s AI Leadership Council, which will issue recommendations this year for how to roll out the technology across the state.

Many hospitals, intent on saving time and cutting costs, have embraced AI tools. Dr. Mehmet Oz, the physician who heads the Centers for Medicare and Medicaid Services, told federal staffers that AI models may provide better care than humans and cost significantly less, sources told Wired. But Drummond says many nurses have found them unhelpful—or even harmful. In a 2024 poll, two-thirds of unionized RNs said AI undermined them and threatened patient safety. Drummond says one nurse described an AI tool designed to automate shift handoffs that had assigned a patient with COVID alongside another who was immunocompromised, risking the latter’s life.

Drummond doesn’t want to ban AI from hospitals, but says there need to be strict controls around its use.

“Everything that reaches patients in health care has gone through rigorous testing and is proven to be safe, effective, and free from harming us,” says Drummond. “Why would we cut out those same test points for this?”
